Tool calling with Apple Intelligence

The foundation models we now have access to through the Foundation Models framework are great at a lot of queries, but what if we want to augment them with more data?

Getting Started

We can use tools to give the model extra data, such as a user's health information from HealthKit or the results of a call to your own API.

In our case, we're going to build a simple tool that provides the menu of a coffee shop. This helps the shop answer questions about what coffee someone should order, while only recommending coffees it actually sells.

The Tool API is simple and flexible, so let's take a look.

```swift
import FoundationModels

// A tool holds no observable state, so a plain final class is enough.
final class CoffeeTool: Tool {
    public let name = "coffeetool"
    public let description = "Provides coffee options available in our coffee shop."

    // The data the model fills in when it decides to call this tool.
    @Generable
    struct Arguments {
        @Guide(description: "The user's original query")
        var naturalLanguageQuery: String
    }

    public init() { }
}
```

First, we add a name and a description. The model sees both when deciding whether to call the tool, and the name also lets us refer to the tool in our instructions. Next, we add an Arguments struct containing all the data the model will pass to the tool. Note that it's marked @Generable, just like the output types you generate with the framework.

There's only one method we need to implement, call(arguments:), so let's dig into that.

```swift
// Inside CoffeeTool
public func call(arguments: Arguments) async throws -> ToolOutput {
    // Join the entries into plain text; interpolating the array directly
    // would embed Swift's array formatting (brackets, quotes, escaped newlines).
    return ToolOutput("""
        These are all available coffees.

        \(Coffee.all.map(\.lmString).joined(separator: "\n\n"))
        """)
}
```

It's a very simple API for something so powerful. You're handed the arguments (populated by the foundation model), and you just return some data via ToolOutput.
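The tool above ignores its arguments and returns the whole menu, which works well for a short menu. With a longer one, you could use naturalLanguageQuery to narrow things down before building the output. Here's a minimal sketch of that idea: coffees(matching:in:) is a hypothetical helper using a naive keyword match (nothing provided by the framework), and it falls back to the full menu when nothing matches, so the model always has something to choose from.

```swift
import Foundation

// Hypothetical stand-in with the same shape as the article's Coffee type.
struct Coffee {
    let name: String
    let summary: String
}

/// Returns the coffees whose name or summary contains a word from the query,
/// or the whole menu if nothing matches. A naive keyword heuristic, for illustration only.
func coffees(matching query: String, in menu: [Coffee]) -> [Coffee] {
    // Lowercased words of three or more letters; punctuation and spaces split words.
    let words = Set(
        query.lowercased()
            .split(whereSeparator: { !$0.isLetter })
            .map(String.init)
            .filter { $0.count >= 3 }
    )
    let matches = menu.filter { coffee in
        let text = (coffee.name + " " + coffee.summary).lowercased()
        return words.contains { text.contains($0) }
    }
    return matches.isEmpty ? menu : matches
}

// Hypothetical sample menu.
let menu = [
    Coffee(name: "Oat Flat White", summary: "Espresso with steamed oat milk."),
    Coffee(name: "Cold Brew", summary: "Slow-steeped, served over ice."),
]

print(coffees(matching: "something with oat milk", in: menu).map(\.name))
// prints ["Oat Flat White"]
```

A keyword match like this is crude (a query for "iced" won't find a summary that says "ice"), so for a small menu, returning everything and letting the model choose is often the better trade-off.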

ToolOutput gives us a few options, but I've found that simply returning the data as a string gives better results. Think of it like the text you'd paste into ChatGPT or Claude: you give it a big string, and it gives you a big string back. Here, I'm mapping the array of coffees into a single string the model can understand.
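It's worth checking exactly what string the model receives. Interpolating an array straight into a string literal produces Swift's array description, with brackets, quotes, and newlines escaped as \n, whereas joining the entries produces plain lines of text. A small sketch comparing the two, using hypothetical sample coffees:

```swift
// Hypothetical sample type with the same shape as the article's Coffee.
struct Coffee {
    let name: String
    let summary: String

    // Plain-text rendering of one coffee for the language model.
    var lmString: String {
        """
        name: \(name)
        summary: \(summary)
        """
    }
}

let menu = [
    Coffee(name: "Flat White", summary: "Espresso with velvety steamed milk."),
    Coffee(name: "Cold Brew", summary: "Slow-steeped and served over ice."),
]

// Interpolating the array directly gives Swift's array formatting:
// brackets, quoted elements, and newlines escaped as backslash-n.
let interpolated = "\(menu.map(\.lmString))"

// Joining produces plain lines of text, closer to what you'd paste into a chat.
let joined = menu.map(\.lmString).joined(separator: "\n\n")

print(interpolated)
print(joined)
```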

```swift
struct Coffee {
    let name: String
    let summary: String

    // A plain-text rendering of this coffee for the language model.
    var lmString: String {
        """
        name: \(name)
        summary: \(summary)
        """
    }
}
```

This could easily be expanded to hit a remote API, call local system frameworks, and more.
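As one example of that, here's a sketch of loading the menu from JSON instead of a hard-coded list. CoffeeMenuLoader is a hypothetical type: it takes its data through an injected fetch closure so it can be exercised offline with a stub, and it assumes the remote endpoint returns an array of objects with name and summary fields.

```swift
import Foundation

// Codable so the menu can be decoded from JSON; same fields as the article's Coffee.
struct Coffee: Codable {
    let name: String
    let summary: String
}

/// Hypothetical loader that gets raw menu data from an injected closure
/// and decodes it into coffees.
struct CoffeeMenuLoader {
    let fetch: () async throws -> Data

    func load() async throws -> [Coffee] {
        let data = try await fetch()
        return try JSONDecoder().decode([Coffee].self, from: data)
    }
}

// A stub standing in for a remote endpoint.
let stubLoader = CoffeeMenuLoader {
    Data(#"[{"name": "Cortado", "summary": "Espresso cut with a little warm milk."}]"#.utf8)
}
```

In an app, the closure might wrap URLSession, for example { try await URLSession.shared.data(from: menuURL).0 } with your own menuURL, and the tool's call method would await loader.load() before building its output string.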

Setting up the Model & Tool

Now that we have our tool, we need to tell the model to use it.

Giving the model access to it is easy: just pass the tool to the LanguageModelSession.

You'll also need to be explicit in your instructions that the model should use the tool. If you aren't, it will often ignore the tool and fall back on its own knowledge.

```swift
import FoundationModels
import Observation

@Observable
@MainActor
final class CoffeeIntelligence {
    var session: LanguageModelSession
    private let coffeeTool: CoffeeTool
    private(set) var recommendation: CoffeeRecommendation.PartiallyGenerated?
    private var error: Error?

    init() {
        let coffeeTool = CoffeeTool()
        self.session = LanguageModelSession(
            guardrails: .default,
            // Tools the model may call while generating.
            tools: [coffeeTool],
            instructions: Instructions {
                "Your job is to recommend a coffee for the user, available at the shop Alex's Coffee."
                "You must take into account all details of their query, such as milk preference and taste."
                """
                Always use the coffeetool tool to find coffees available at Alex's Coffee, to recommend based on their query, as these are the ones we actually sell.
                """
            }
        )
        self.coffeeTool = coffeeTool
    }
}
```

All we have to do now is get the user's prompt into our CoffeeIntelligence.

```swift
// Inside CoffeeIntelligence
func generate(prompt: String) async throws {
    do {
        let stream = session.streamResponse(
            to: Prompt {
                """
                Use the coffeetool tool to find coffees to recommend based on their query, which is below, as these are the ones we actually sell.
                Find a coffee for the user based on their query, which is below.
                \(prompt)
                """
            },
            generating: CoffeeRecommendation.self,
            includeSchemaInPrompt: false,
            options: GenerationOptions(sampling: .greedy)
        )
        // Each element is a snapshot of the recommendation generated so far.
        for try await partialResponse in stream {
            recommendation = partialResponse
        }
    } catch {
        self.error = error
    }
}
```

You'll notice I was explicit again in the prompt; I've had mixed results without doing this.

We're also using partial responses, a built-in way to stream output, just like you're used to seeing in other chat apps. The stream updates as the model generates, so you can either wait and show the finished result, or show it as it's being generated.

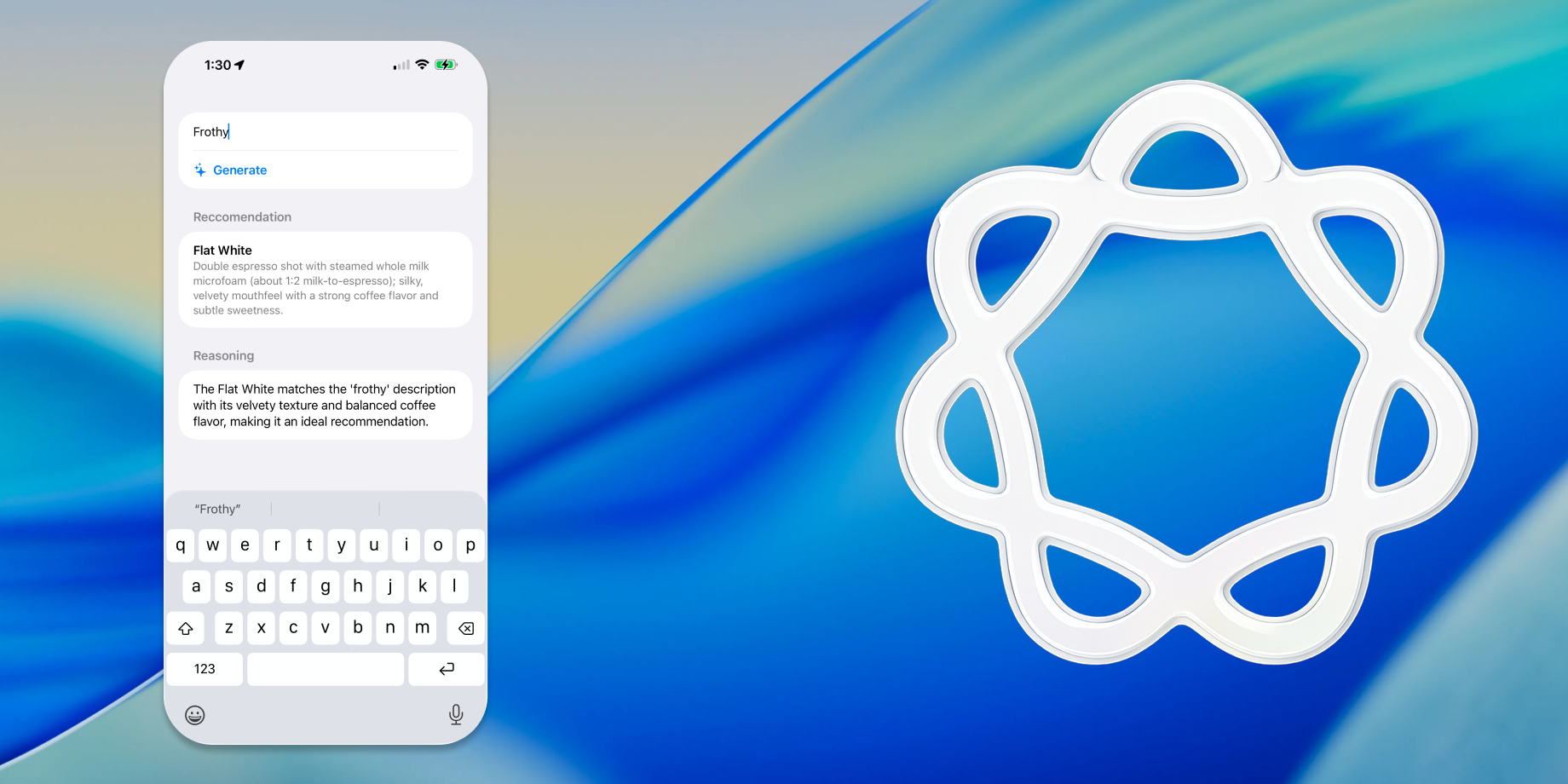

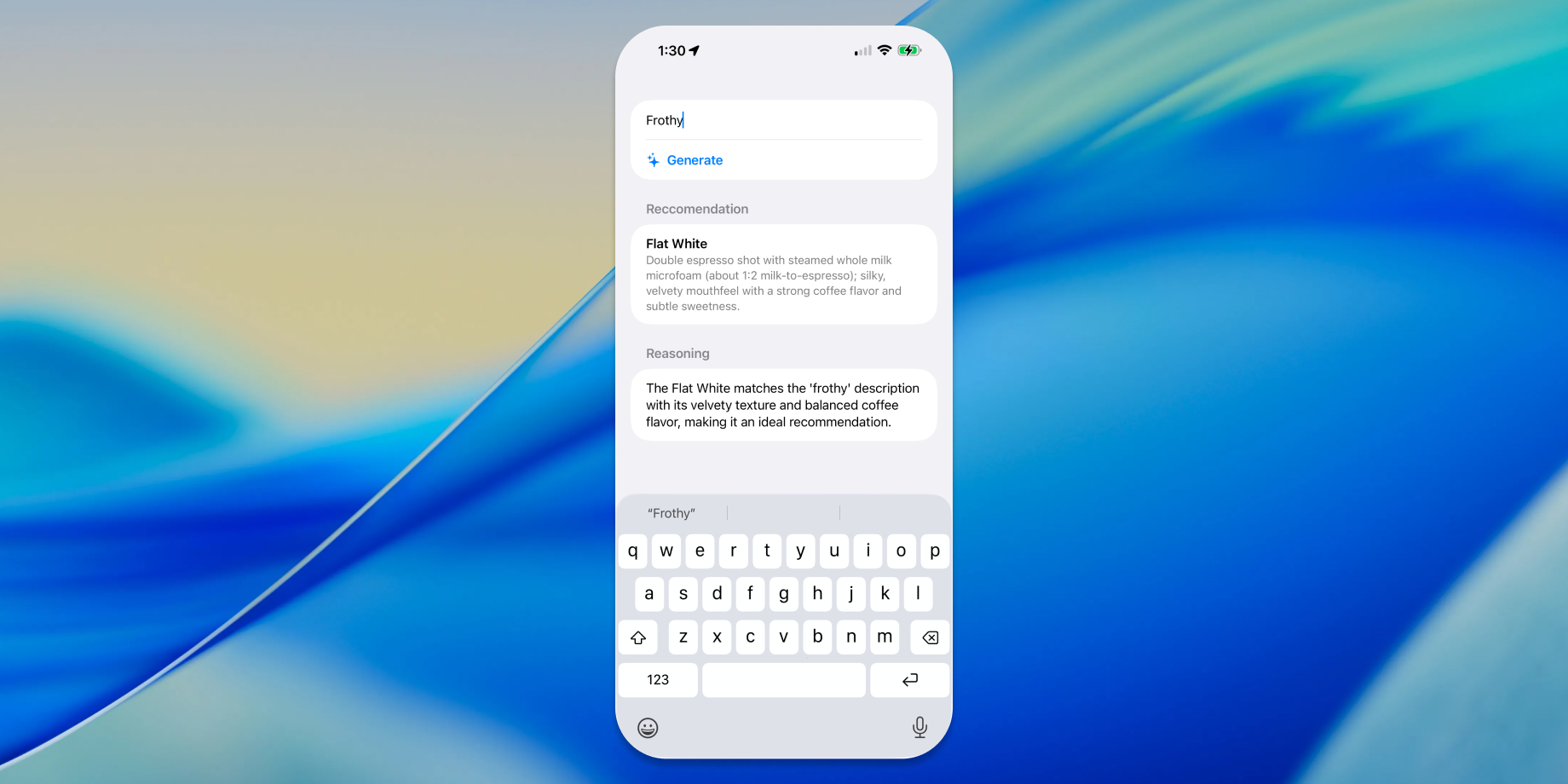
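The stream yields CoffeeRecommendation.PartiallyGenerated values, but CoffeeRecommendation itself isn't shown here. Judging from the fields the view reads (name, description, and reason), a plausible definition is sketched below; the @Guide descriptions are my own assumptions. @Generable also synthesizes the PartiallyGenerated counterpart, in which every property is optional, which is why the view in the next section falls back to an empty string for each field.

```swift
import FoundationModels

// Reconstructed from the fields ContentView reads; the @Guide text is assumed.
@Generable
struct CoffeeRecommendation {
    @Guide(description: "The name of the recommended coffee, exactly as it appears on the menu")
    let name: String

    @Guide(description: "A short description of the coffee")
    let description: String

    @Guide(description: "Why this coffee suits the user's query")
    let reason: String
}
```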

Throwing it all together

Using this model to make a small app is delightfully easy. You just hold a reference to CoffeeIntelligence in your view, allow the user to enter some text, and watch the response.

Because we're observing the partial response, the recommendation fades in as it's generated. The smooth animation adds a nice touch!
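It helps to know that each element of the stream is a snapshot of everything generated so far, not just the newest fragment, which is why the view model assigns each partial response rather than appending it. Here's a small simulation of that pattern using AsyncStream; PartialRecommendation, simulatedStream(), and latestRecommendation() are hypothetical stand-ins, and no model is involved.

```swift
// Hypothetical stand-in for CoffeeRecommendation.PartiallyGenerated: every field optional.
struct PartialRecommendation: Equatable {
    var name: String?
    var description: String?
    var reason: String?
}

/// Simulates a response stream in which each element is the whole value
/// generated so far, not just the newest fragment. No model is involved.
func simulatedStream() -> AsyncStream<PartialRecommendation> {
    AsyncStream { continuation in
        var snapshot = PartialRecommendation()
        snapshot.name = "Cortado"
        continuation.yield(snapshot)
        snapshot.description = "Espresso cut with a little warm milk."
        continuation.yield(snapshot)
        snapshot.reason = "Small and strong, as requested."
        continuation.yield(snapshot)
        continuation.finish()
    }
}

// Consumer side: replace the stored value with each snapshot, as the view model does.
func latestRecommendation() async -> PartialRecommendation? {
    var latest: PartialRecommendation?
    for await snapshot in simulatedStream() {
        latest = snapshot
    }
    return latest
}
```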

```swift
import SwiftUI
import FoundationModels

struct ContentView: View {
    @State var coffeeIntelligence: CoffeeIntelligence = .init()
    @State var prompt: String = ""

    var body: some View {
        List {
            TextField("How do you like your coffee?", text: $prompt)

            Button(action: {
                Task {
                    try? await coffeeIntelligence.generate(prompt: prompt)
                }
            }, label: {
                HStack {
                    Image(systemName: "sparkles")
                    Text("Generate")
                    Spacer()
                    // Show a spinner while the model is still responding.
                    if coffeeIntelligence.session.isResponding {
                        ProgressView()
                    }
                }
                .font(.headline.weight(.semibold))
            })
            .disabled(coffeeIntelligence.session.isResponding)

            // Fields fill in as the partial response streams, so each one is optional.
            if let recommendation = coffeeIntelligence.recommendation {
                Section(header: Text("Recommendation"), content: {
                    VStack(alignment: .leading) {
                        Text(recommendation.name ?? "")
                            .font(.headline)
                            .foregroundStyle(.primary)
                        Text(recommendation.description ?? "")
                            .font(.subheadline)
                            .foregroundStyle(.secondary)
                    }
                })
                Section(header: Text("Reasoning"), content: {
                    Text(recommendation.reason ?? "")
                })
            }
        }
        .animation(.smooth, value: coffeeIntelligence.recommendation)
        .animation(.smooth, value: coffeeIntelligence.session.isResponding)
    }
}
```

Debugging

There's a neat trick for debugging your models: print out the session's entire transcript. This lets you see the instructions, the prompt, any tool calls and their output, and the response. If you're seeing results you don't expect, it's very handy!

In debug builds, I've added this after the loop over partial responses, so I can check how the model arrived at its result.
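If you'd like this to compile out of release builds entirely, one option is to wrap it in a small extension behind #if DEBUG. A sketch of that, where printTranscriptForDebugging() is a hypothetical name of my own (not a framework API) and it relies on the same session.transcript.entries property as the loop that follows:

```swift
import FoundationModels

extension LanguageModelSession {
    /// Prints each transcript entry (instructions, prompts, tool calls, tool output,
    /// and responses) with its position. The loop only exists in debug builds;
    /// in release builds this method does nothing.
    func printTranscriptForDebugging() {
        #if DEBUG
        for (index, entry) in transcript.entries.enumerated() {
            print("[\(index)] \(entry)")
        }
        #endif
    }
}
```

You'd call session.printTranscriptForDebugging() right after the for try await loop in generate(prompt:).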

```swift
for entry in session.transcript.entries {
    print(entry)
}
```

Wrapping Up

Tool calling adds a lot of potential to your local models, and I'm already putting it into use across my suite of apps.

If you have questions, or something cool to share, I'm @SwiftyAlex on Twitter.